Building a Static Site with Azure DevOps

I’ve wanted to build a static site blog for a while, and while diving into DevOps I decided it was time to execute.

Having managed clunky WordPress blogs in the past, I’m excited to take a simpler approach to blogging and ditch the LAMP stack for good ol’ blob storage.

So what will we be using to get up and running?

- Azure DevOps (version control, deployment pipeline to blob storage)

- Azure Storage (Blob, Static Site)

- Azure CDN (optional - using for custom domain + https functionality)

The Azure DevOps build will be heavily simplified, using a single environment (what, no dev?!?) and a single deployment pipeline (we’ll perform builds manually with a local Hugo install).

Blog management flow:

- Write/edit posts in the local repo using Hugo’s local web server.

- Push changes to the Azure DevOps git repo.

- Use a simple pipeline to copy changes to Azure blob storage.

Let’s dive in!

- Set up your Azure DevOps organization and associated project; the relevant Azure docs are linked below.

  - I went with a private project since I don’t intend for the actual Hugo “site” structure to be publicly available.

  - https://docs.microsoft.com/en-us/azure/devops/organizations/accounts/create-organization?view=azure-devops

- Clone your repository locally and follow the steps in the Hugo “quick start” guide to get your initial site prepared.

  - You can create a new Hugo site directory using the steps in the guide below and copy its contents into your repository folder.

  - https://gohugo.io/getting-started/quick-start/

-

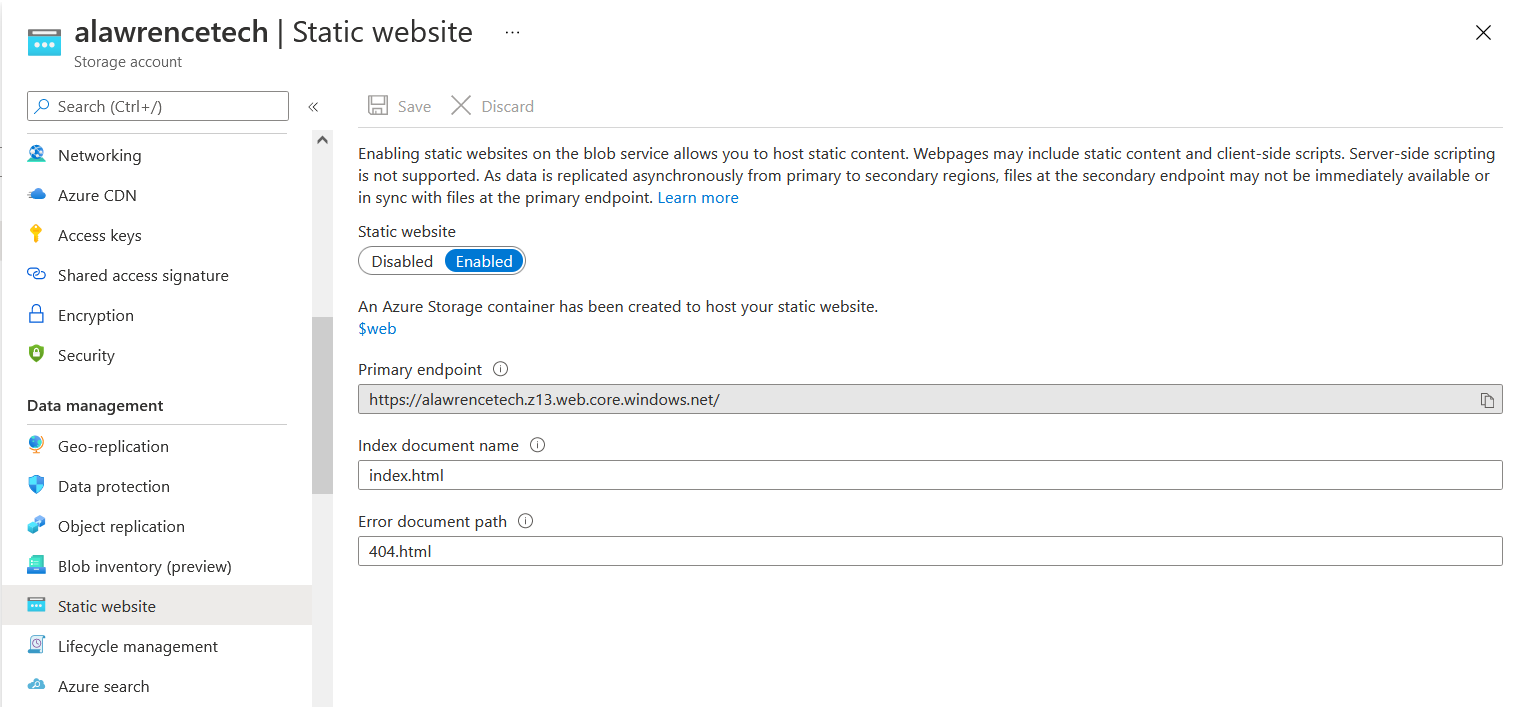

Setup a storage account and then a static site storage container.

- Create a new storage account.

- Setup a static website storage container.

- This is super easy, go to “Static website” under “Data management” and set the slider to “Enabled”.

- Then add the associated index.html and error page .html file names associated with your theme.

- This will allow you to navigate to the root of your site without specifying “index.html” in your path. Remember, Azure blob is our “web server” in this scenario.

- This will allow you to navigate to the root of your site without specifying “index.html” in your path. Remember, Azure blob is our “web server” in this scenario.

- You should now have a container within your newly created storage account that we can use to upload your hugo “public” site directory to.

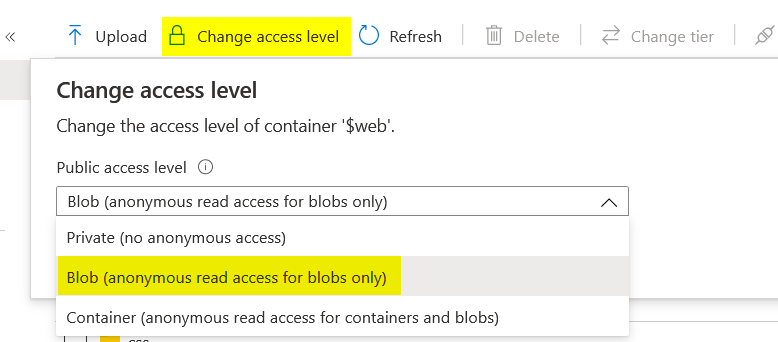

- Adjust the container access level to allow it to be publicly accessible.

- Create a new “Azure Resource Manager” service principal (configured as a service connection in your Azure DevOps project settings) following the guide below. This will be used by the Azure DevOps pipeline to authenticate and push site changes to the Azure storage container.

- Create a new Azure pipeline file called “azure-pipelines.yml” in your repository, populating it with the YAML text block below. Make sure to update the name of the service principal you created and the name of your storage account.

  - This pipeline will copy the “/public” directory of your repository, which contains the Hugo site build, to the Azure container (using azcopy on our pipeline agent).

  - Some additional arguments are added: /S copies directories recursively, /Y suppresses confirmation prompts, and /SetContentType sets the proper content type on uploaded files.

  - Once complete, commit/push your changes. You should now have a “pipeline” populated in the Azure DevOps dashboard.

  - NOTE: I would usually go with a Linux vmImage, especially in this pipeline scenario, but I ran into a million and one issues with the AzureFileCopy task on Linux and found that most folks just run this task on Windows to save the headache. Yes, it’s an odd issue.

```yaml
trigger:
- master

pool:
  vmImage: 'windows-latest'

steps:
- task: AzureFileCopy@3
  inputs:
    sourcePath: public
    azureSubscription: <service principal name>
    destination: azureBlob
    storage: <storage account name>
    containerName: $web
    additionalArgumentsForBlobCopy: /S /Y /SetContentType
    cleanTargetBeforeCopy: true
```

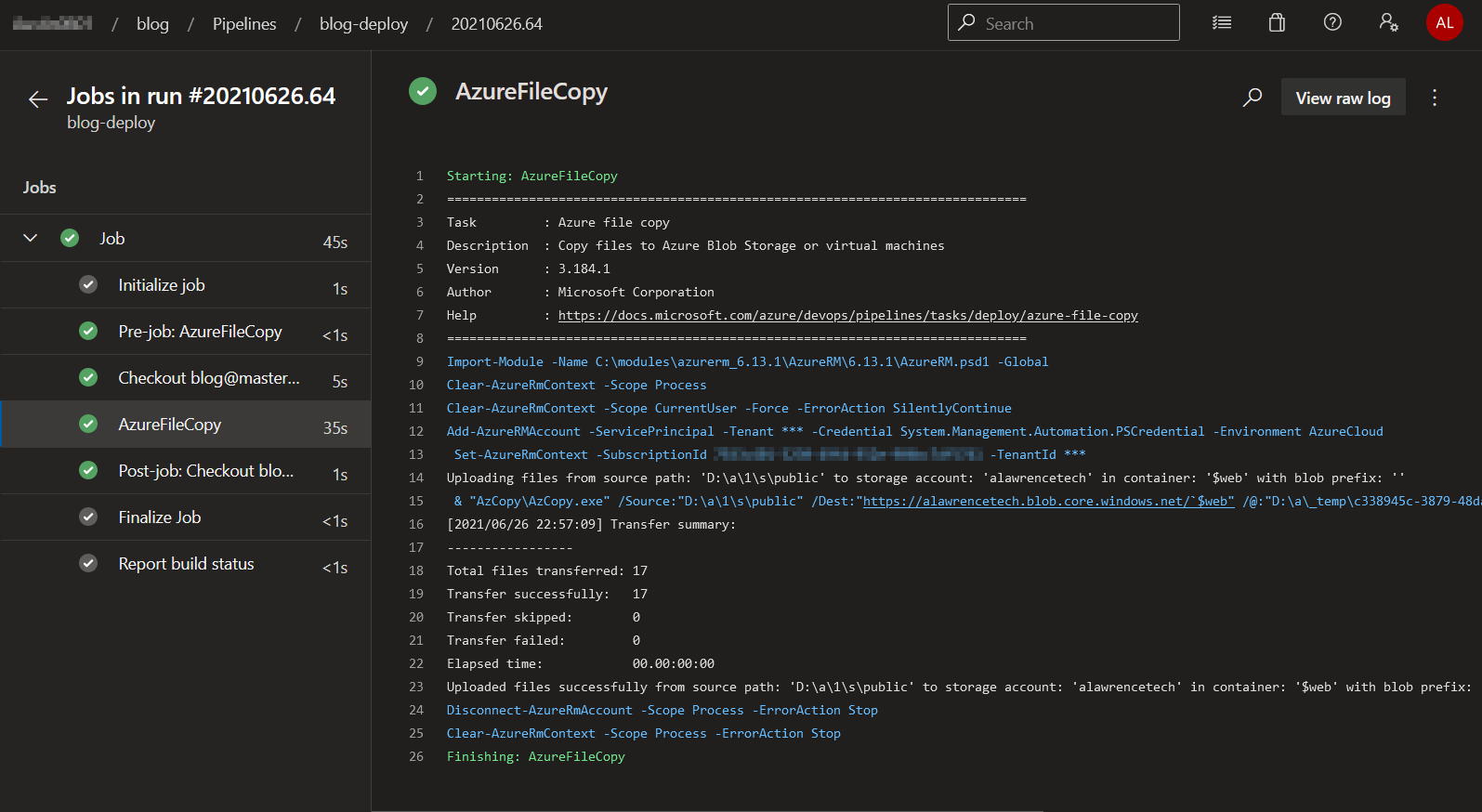

- Testing your pipeline and hugo site accessability.

- Now it’s time to make sure our pipeline is working and properly deploying changes to the site.

- On your hugo site, create a new page/post and commit/push it to your repository.

- If all goes well you should see some “green” in the pipeline jobs section of Azure DevOps.

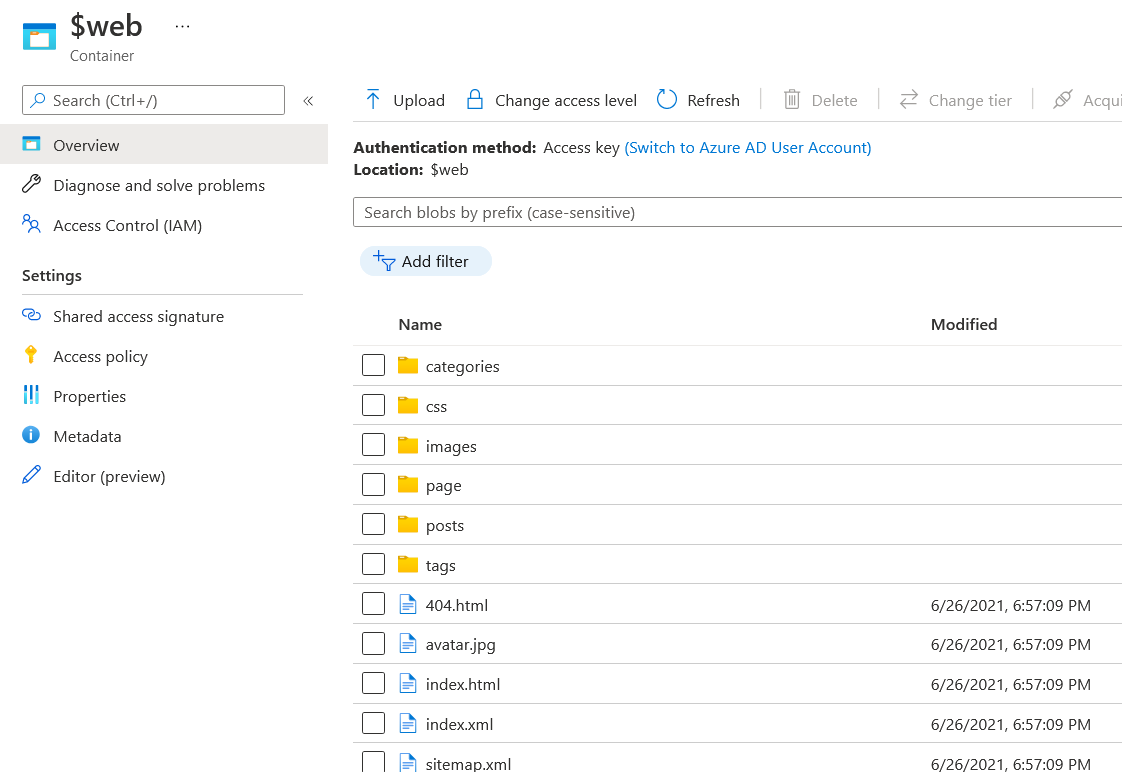

- Check on your Azure storage container blobs, see if the public directory from your repo has successfully been copied and that mod timestamps look correct.

- Remember, anytime you push a change to the primary branch of the repository the pipeline will be triggered.
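Since only the “public” directory ever gets deployed, one optional refinement is to trigger the pipeline only when that directory changes. Here is a minimal sketch, assuming the same `master` branch and a `public` folder at the repository root as in the pipeline file; the path filter is an addition for illustration and not part of the setup described above.

```yaml
# Replaces the simple "trigger:" section of azure-pipelines.yml.
trigger:
  branches:
    include:
    - master        # same primary branch as the original trigger
  paths:
    include:
    - public        # assumption: only changes under public/ should start a deployment
```

With a filter like this, a push that only touches drafts or theme files without a rebuilt “public” directory should not start a run, which fits the manual build approach.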

That’s it! You should now be able to reach your Hugo site at the primary endpoint URL listed under your storage account’s static website settings. If you want a custom domain with HTTPS, Azure CDN is your friend.

I’m sure there are quirks to iron out and adjustments that could make this process far more efficient. For example, the pipeline tasks could be set up under a “release” pipeline, with approvals and other functionality added.
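As a rough idea of what that could look like while staying in YAML (rather than a classic release pipeline), the copy step could move into a deployment job that targets an environment; approvals and checks can then be attached to that environment in Azure DevOps. Treat this as a sketch under assumptions, not a tested setup: the stage, job, and environment names (`Deploy`, `DeploySite`, `blog-production`) are hypothetical, and the task inputs are simply carried over from the pipeline above.

```yaml
trigger:
- master

stages:
- stage: Deploy                      # hypothetical stage name
  jobs:
  - deployment: DeploySite           # hypothetical job name
    pool:
      vmImage: 'windows-latest'
    # Hypothetical environment; approvals are configured on it in the Azure DevOps UI.
    environment: blog-production
    strategy:
      runOnce:
        deploy:
          steps:
          # Deployment jobs do not check out the repository automatically.
          - checkout: self
          - task: AzureFileCopy@3
            inputs:
              sourcePath: $(Build.SourcesDirectory)/public
              azureSubscription: <service principal name>
              destination: azureBlob
              storage: <storage account name>
              containerName: $web
              additionalArgumentsForBlobCopy: /S /Y /SetContentType
              cleanTargetBeforeCopy: true
```

The explicit `checkout: self` step matters here because, unlike a regular job, a deployment job starts without the repository contents, so the “public” directory would otherwise be missing.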

Overall I’ll tinker with this setup and see how it goes. Hope this info is helpful!